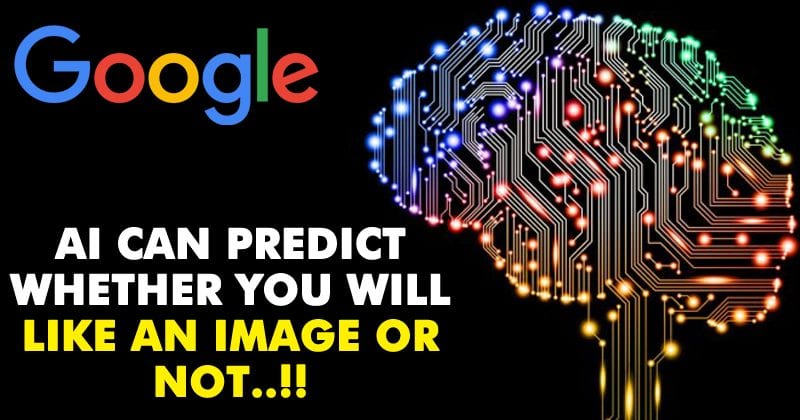

In May of this year, researchers at Google Brain announced AutoML, a machine learning algorithm that learns to build other machine learning algorithms. The goal was to see whether an artificial intelligence could create another artificial intelligence without human intervention, with the ultimate aim of deploying these technologies more widely. Few people are capable of developing such systems, and those who can are highly coveted; projects like this one would help bring artificial intelligence to many more fields and companies, much more quickly. According to experts such as Dave Heiner, an advisor at Microsoft, slower progress would pose a great risk to AI itself, since part of its success depends on broad adoption.

Google CEO Sundar Pichai boasted about AutoML during the presentation of the Pixel 2 and Pixel 2 XL, and today he could boast again about what this promising initiative has achieved. As Futurism explains, the researchers automated the design of machine learning models using an approach called reinforcement learning: AutoML acts as a controller neural network that, in turn, creates a smaller “child” network. One such creation, called NASNet, has outperformed all of its human-built counterparts. Its job is to recognize objects in real-time video, identifying people, cars, bags, backpacks and other elements in the images. AutoML evaluates the child network’s performance and uses that data to improve it on its own, repeating the process thousands of times. This is an expensive but essential task that is usually done by humans.

Scrolling through your Instagram feed, you can easily rate your friends’ photos. Is this one aesthetically pleasing? What score would you give it? For a computer, this is not a simple task.
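Stepping back to AutoML for a moment: the feedback loop described above (a controller proposes a child network, the child is evaluated, and the result steers the next proposal) can be illustrated with a small policy-gradient toy. This is a simplified sketch, not Google’s code. The published neural architecture search work used a recurrent controller and actually trained every child network, whereas here the controller is just one softmax per design choice, the search space is tiny, and `SEARCH_SPACE`, `fake_child_accuracy` and `search` are hypothetical names, with `fake_child_accuracy` standing in for “train the network and measure its validation accuracy”.

```python
import math
import random

# Hypothetical, tiny search space: the controller picks one option per key.
SEARCH_SPACE = {
    "num_layers": [2, 4, 6, 8],
    "filter_size": [3, 5, 7],
    "width": [16, 32, 64],
}


def fake_child_accuracy(arch):
    """Hypothetical stand-in for 'train the child network, return validation accuracy'.

    Purely illustrative: it rewards 6 layers, 3x3 filters and a width of 64.
    """
    score = 0.5
    score += 0.15 * (1 - abs(arch["num_layers"] - 6) / 6)
    score += 0.10 * (arch["filter_size"] == 3)
    score += 0.10 * (arch["width"] / 64)
    return score


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def search(steps=3000, lr=0.2, seed=0):
    """REINFORCE with a moving-average baseline over independent categorical choices."""
    rng = random.Random(seed)
    logits = {key: [0.0] * len(opts) for key, opts in SEARCH_SPACE.items()}
    baseline = None

    for _ in range(steps):
        # 1. The controller samples one option index per design decision.
        picks = {}
        for key, opts in SEARCH_SPACE.items():
            probs = softmax(logits[key])
            picks[key] = rng.choices(range(len(opts)), weights=probs)[0]
        arch = {key: SEARCH_SPACE[key][i] for key, i in picks.items()}

        # 2. Evaluate the child network (here: the fake reward).
        reward = fake_child_accuracy(arch)

        # 3. Policy-gradient update: raise the log-probability of the sampled
        #    choices when the reward beats the running baseline, lower it otherwise.
        baseline = reward if baseline is None else 0.9 * baseline + 0.1 * reward
        advantage = reward - baseline
        for key, idx in picks.items():
            probs = softmax(logits[key])
            for j, p in enumerate(probs):
                grad = (1.0 if j == idx else 0.0) - p  # d log softmax(idx) / d logit_j
                logits[key][j] += lr * advantage * grad

    # Report the most probable option for each decision.
    return {
        name: SEARCH_SPACE[name][max(range(len(lg)), key=lg.__getitem__)]
        for name, lg in logits.items()
    }


if __name__ == "__main__":
    print(search())
```

With this made-up reward, `search()` should settle on the options the reward favours. With a real reward, every evaluation step means training a whole network, which is why the process is so expensive.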
Yet Google’s artificial intelligence can now rate photos: it predicts whether humans will like an image or not. Google described the work in a study on Neural Image Assessment (NIMA). Simply put, NIMA is trained on a set of images and the scores people gave them (in photo contests, for example). It can then evaluate any photo on both technical and aesthetic grounds, assigning it a score from 1 to 10, as a human would. NIMA’s assessments come very close to those a human would make. For example, here are some photos with NIMA’s scores (in parentheses, the median of the scores given by humans):

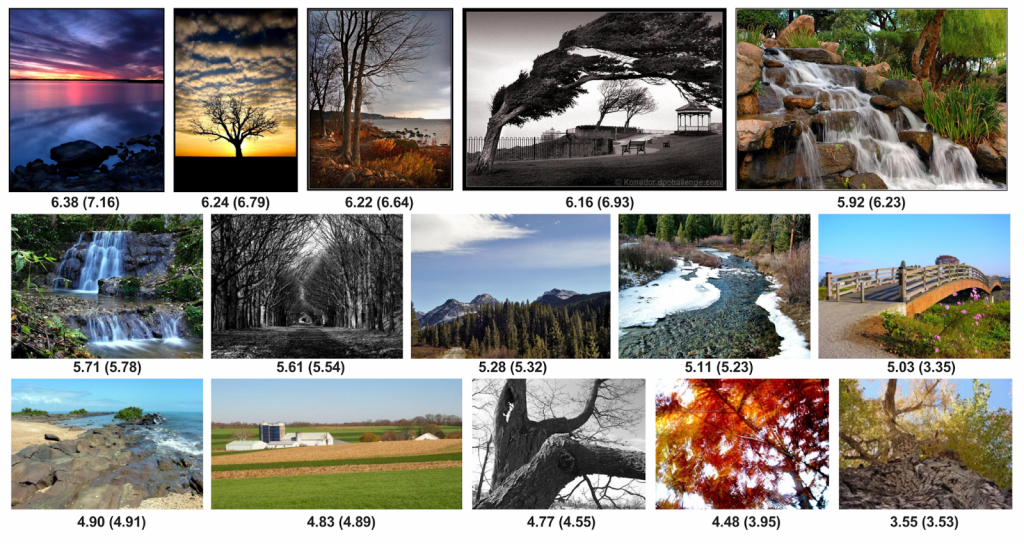

NIMA also takes the technical quality of the image into account. Is there noise? Are there compression artifacts? All of this affects the score, which can fall from a reasonable 5.93 to a dismal 1.39 for the same framing and subject matter.
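As a rough illustration of how a single 1-to-10 score can come out of such a model: in Google’s description of NIMA, the network predicts a probability for each of the ten possible ratings rather than one number, is trained to match the distribution of human votes with an Earth Mover’s Distance (EMD) loss, and the mean of that distribution is reported as the score. The sketch below shows only that arithmetic in plain Python; the function names and the vote counts are hypothetical example data, and no actual image model is involved.

```python
import math
from typing import Sequence

SCORES = range(1, 11)  # one bucket per possible rating, 1..10


def normalize(counts: Sequence[float]) -> list:
    """Turn 10 vote counts (or unnormalized probabilities) into a distribution."""
    total = float(sum(counts))
    if len(counts) != 10 or total <= 0:
        raise ValueError("need 10 non-negative values with a positive sum")
    return [c / total for c in counts]


def mean_score(counts: Sequence[float]) -> float:
    """Expected rating under the distribution: the single score shown to users."""
    p = normalize(counts)
    return sum(s * q for s, q in zip(SCORES, p))


def std_score(counts: Sequence[float]) -> float:
    """Spread of the ratings: how much people would disagree about the photo."""
    p = normalize(counts)
    mu = sum(s * q for s, q in zip(SCORES, p))
    return math.sqrt(sum((s - mu) ** 2 * q for s, q in zip(SCORES, p)))


def emd_loss(p_true: Sequence[float], p_pred: Sequence[float], r: int = 2) -> float:
    """Normalized Earth Mover's Distance between two rating distributions.

    Compares cumulative distributions, so predicting 9 when humans said 10
    costs less than predicting 1 (ordinary cross-entropy ignores that order).
    """
    a, b = normalize(p_true), normalize(p_pred)
    cdf_a = cdf_b = acc = 0.0
    for qa, qb in zip(a, b):
        cdf_a += qa
        cdf_b += qb
        acc += abs(cdf_a - cdf_b) ** r
    return (acc / len(a)) ** (1.0 / r)


if __name__ == "__main__":
    votes = [0, 0, 1, 2, 5, 8, 3, 1, 0, 0]  # made-up contest votes for ratings 1..10
    print(f"{mean_score(votes):.2f}")  # prints 5.65
    print(f"{std_score(votes):.2f}")
```

The distribution-based design is what lets such a model say not only “about 5.7” but also how strongly people would disagree about a photo.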

But what good is an artificial intelligence that scores photos? If software can know in advance how a human would rate an image, developers can build photography features more accurately (such as those that automatically adjust exposure, contrast and colour), or even improve the post-processing in smartphone cameras. So, what do you think about this? Share your views and thoughts in the comment section below.